Building AI apps using GPT, Claude and Cloudflare

Learn how to build AI apps using the OpenAI GPT, Claude and Cloudflare AI APIs.

Artificial intelligence has transformed the way we interact with technology. One of the key innovations enabling this AI revolution is the Large Language Model (LLM). LLMs are powerful natural language processing systems that can understand text, generate human-like writing, and even hold conversations in natural language.

In this article, we'll explore how to use LLMs to quickly build intelligent applications through APIs offered by companies like OpenAI, Anthropic, and Cloudflare. Using these capabilities takes just a few lines of code. The best way to understand a new technology is to build things with it. So let's get started.

Large Language Models

This AI automation craze is possible, of course, because of Large Language Models, or LLMs. LLMs are black boxes that take a sequence of tokens as input and try to predict which tokens should come next. The major models available online that you can pay for and use are:

- OpenAI GPT APIs

- Claude

- Cloudflare AI

With these models, the range of AI-powered applications you can build is practically endless. I've been experimenting with them a lot myself! More on that in a bit.
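To make the "predict the next token" idea concrete, here is a deliberately tiny toy: a bigram model that counts which word follows which in a sample sentence, then generates text by repeatedly picking the most frequent successor. It is only an analogy; real LLMs use neural networks over subword tokens, and the function names (`trainBigrams`, `predictNext`, `generate`) are made up for illustration.

```javascript
// Toy illustration only: counts which word follows each word in a corpus.
// Real LLMs use neural networks over subword tokens, not word counts.
function trainBigrams(text) {
  const words = text.toLowerCase().split(/\s+/).filter(Boolean);
  const counts = new Map(); // word -> Map(nextWord -> count)
  for (let i = 0; i < words.length - 1; i++) {
    const next = counts.get(words[i]) ?? new Map();
    next.set(words[i + 1], (next.get(words[i + 1]) ?? 0) + 1);
    counts.set(words[i], next);
  }
  return counts;
}

// Returns the most frequent successor of `word`, or null if none was seen.
function predictNext(counts, word) {
  const next = counts.get(word.toLowerCase());
  if (!next) return null;
  let best = null;
  let bestCount = 0;
  for (const [candidate, count] of next) {
    if (count > bestCount) {
      best = candidate;
      bestCount = count;
    }
  }
  return best;
}

// Greedy generation: feed each prediction back in as the next input.
function generate(counts, start, maxWords) {
  const out = [start];
  for (let i = 0; i < maxWords; i++) {
    const next = predictNext(counts, out[out.length - 1]);
    if (next === null) break;
    out.push(next);
  }
  return out.join(" ");
}
```

Trained on "the cat sat on the mat the cat ate", `generate(counts, "the", 4)` produces "the cat sat on the": the same loop of "predict one token, append it, repeat" that LLM APIs run for you at a vastly larger scale.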

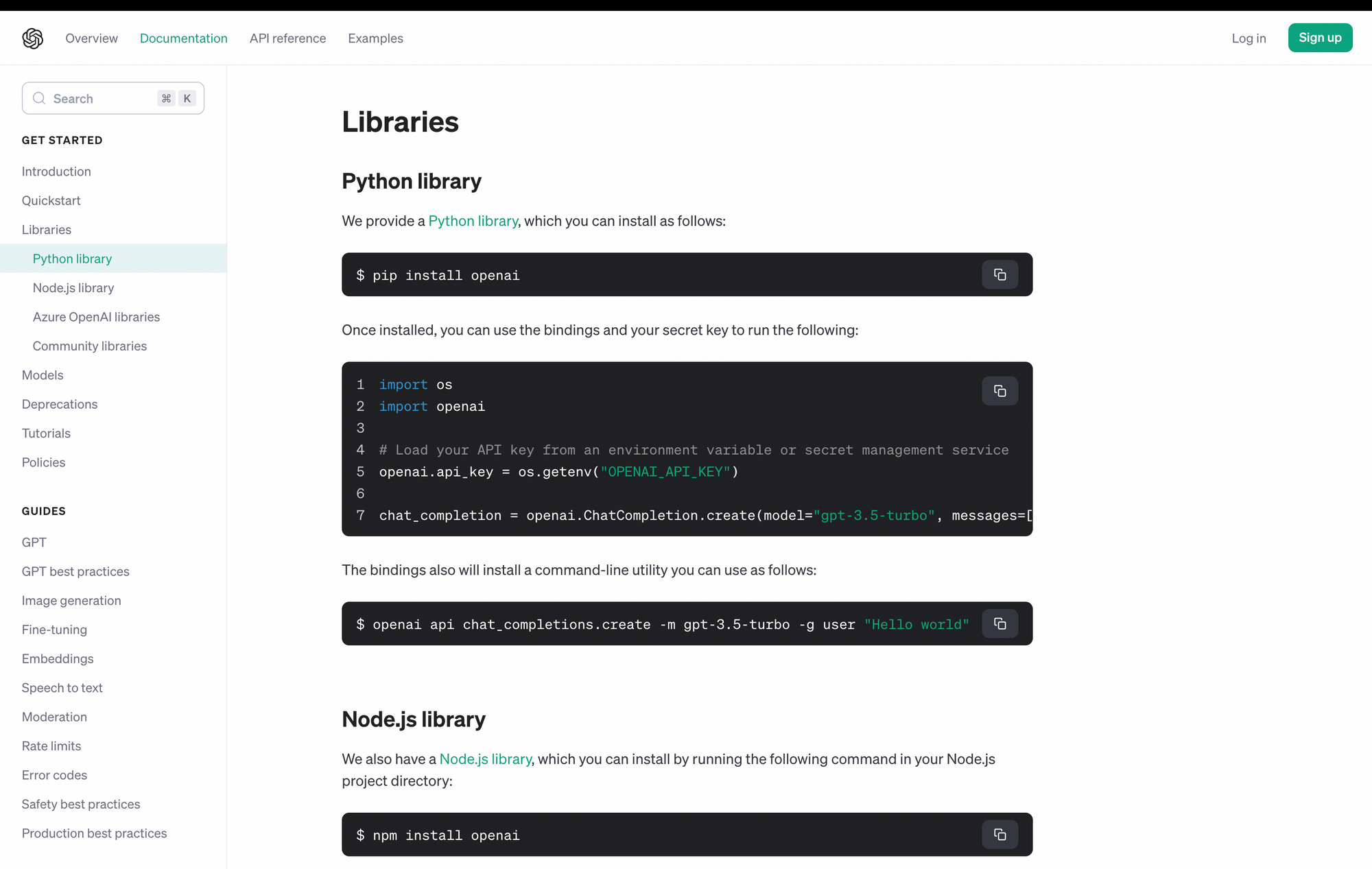

OpenAI

Let's start with the kinds of APIs each provider offers, beginning with OpenAI. OpenAI provides first-party SDKs for Node.js and Python, and I've used both. Because the LLM landscape moves so fast, I'd always recommend going with first-party SDKs; third-party ones struggle to keep up.

The two main APIs they provide are completions and function calling.

Completions

Straight from OpenAI's docs: "Chat models take a list of messages as input and return a model-generated message as output." To understand what this means, take a look at the example below.

```javascript
import OpenAI from "openai";

// The client reads OPENAI_API_KEY from the environment by default.
const openai = new OpenAI();

const response = await openai.chat.completions.create({
  model: "gpt-3.5-turbo",
  messages: [
    { role: "system", content: "You are a helpful assistant." },
    { role: "user", content: "Who won the world series in 2020?" },
    { role: "assistant", content: "The Los Angeles Dodgers won the World Series in 2020." },
    { role: "user", content: "Where was it played?" },
  ],
});

// The model's reply to the latest user message.
console.log(response.choices[0].message.content);
```

As developers, we have to pass all of the relevant messages in a conversation to GPT: system messages that define GPT's behavior, user messages containing the queries users ask, and assistant messages, i.e. the API's earlier responses in the history.

The API itself doesn't remember previous requests, so this context is what lets GPT keep track of the conversation and predict the next output more accurately. Try it on your own using the docs!
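Since your app has to keep the history itself, a small wrapper helps. The sketch below assumes a hypothetical `complete(messages)` function that you supply, for example one that calls `openai.chat.completions.create` and returns `response.choices[0].message.content`; `createChat` is likewise an illustrative name, not part of any SDK.

```javascript
// Keeps a running conversation in the OpenAI chat message format.
// `complete` is a hypothetical async function you provide: it receives the
// full message list and resolves to the assistant's reply text.
function createChat(systemPrompt, complete) {
  const messages = [{ role: "system", content: systemPrompt }];

  return {
    async ask(userText) {
      messages.push({ role: "user", content: userText });
      // Send the whole history (as a copy) so the model sees earlier turns.
      const reply = await complete([...messages]);
      messages.push({ role: "assistant", content: reply });
      return reply;
    },
    history() {
      // Return copies so callers can't mutate the internal state.
      return messages.map((m) => ({ ...m }));
    },
  };
}
```

Injecting `complete` instead of hard-coding the SDK call keeps the history logic testable without network access, and makes it easier to swap in another provider later.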

Function Calling

The other API you can use for building AI apps is function calling. It lets you describe functions that GPT can use, in the JSON format defined by OpenAI. Once you pass in these descriptions and write some glue code, GPT can "call" functions in your codebase to get data as needed. Strictly speaking, it only tells you which function to call and with what arguments; your code still has to call the function and send the result back.
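The glue code can be sketched as follows. In the `functions`-style API, the assistant message carries a `function_call` object whose `name` is one of your functions and whose `arguments` is a JSON string; after running the function, you append a message with role `"function"` and send the conversation back so GPT can phrase the final answer. The `availableFunctions` map and its canned weather data are hypothetical stand-ins (newer API versions express the same idea through `tools` and `tool_calls`).

```javascript
// Local implementations the model is allowed to "call".
// Hypothetical stand-in: returns canned data instead of querying a weather service.
const availableFunctions = {
  get_current_weather({ location, unit = "fahrenheit" }) {
    const temperature = unit === "celsius" ? 22 : 72;
    return { location, temperature, unit, forecast: "sunny" };
  },
};

// Given the assistant message from a chat completion, run the requested
// function (if any) and build the "function" message to send back to GPT.
function handleFunctionCall(message) {
  if (!message.function_call) return null; // the model answered directly
  const { name, arguments: rawArgs } = message.function_call;
  if (!Object.prototype.hasOwnProperty.call(availableFunctions, name)) {
    throw new Error(`Model requested unknown function: ${name}`);
  }
  // Arguments arrive as a JSON string; JSON.parse throws if it is malformed.
  const args = JSON.parse(rawArgs);
  const result = availableFunctions[name](args);
  return { role: "function", name, content: JSON.stringify(result) };
}
```

You would then push both the assistant message and the returned function message onto your `messages` array and call `openai.chat.completions.create` again.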

OpenAI's docs include an example that defines a weather API function, shown below, but I'm going to create a better one soon!

```javascript
// Function definitions (parameters described with JSON Schema), passed to
// openai.chat.completions.create({ ..., functions }).
const functions = [
  {
    name: "get_current_weather",
    description: "Get the current weather in a given location",
    parameters: {
      type: "object",
      properties: {
        location: {
          type: "string",
          description: "The city and state, e.g. San Francisco, CA",
        },
        unit: { type: "string", enum: ["celsius", "fahrenheit"] },
      },
      required: ["location"],
    },
  },
];
```

Claude

Anthropic has released its own competitor to OpenAI's GPT APIs: Claude. Claude is faster, cheaper, and more flexible in the data formats it accepts and the number of tokens it supports (a 100,000-token context window!). This can make it a better alternative to GPT's APIs. However, it can be less accurate, and it lags behind GPT in features. Still, if you want something fast and cheap, Claude can get the job done!

Claude also has first-party SDKs for Python and TypeScript, which, as I said earlier, are recommended over third-party ones. That said, Claude's single API has remained quite stable over the past year, so feel free to choose either.

Looking at their prompting format, it's pretty obvious what they expect: a loop of alternating Human and Assistant turns, where Claude's messages are tagged as Assistant. This is unlike the GPT APIs, which also have system messages that let you set context for how the assistant should reply to the user. Believe it or not, Claude's docs address this concern and many others, so be sure to give them a read!
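Because this API takes a single prompt string, an app built around OpenAI-style message arrays needs a small adapter. Here is a sketch, assuming the SDK's `HUMAN_PROMPT` and `AI_PROMPT` values are `"\n\nHuman:"` and `"\n\nAssistant:"` (hard-coded so the snippet runs on its own) and that any system text goes before the first Human turn, following the convention Anthropic documented for system prompts in this text format. `toClaudePrompt` is a hypothetical helper name.

```javascript
// Mirror the SDK's Anthropic.HUMAN_PROMPT and Anthropic.AI_PROMPT values.
const HUMAN_PROMPT = "\n\nHuman:";
const AI_PROMPT = "\n\nAssistant:";

// Converts OpenAI-style messages into one Claude prompt string.
// Assumes any system message comes first, before the first Human turn.
function toClaudePrompt(messages) {
  let prompt = "";
  for (const { role, content } of messages) {
    if (role === "system") prompt += content;
    else if (role === "user") prompt += `${HUMAN_PROMPT} ${content}`;
    else if (role === "assistant") prompt += `${AI_PROMPT} ${content}`;
    else throw new Error(`Unsupported role: ${role}`);
  }
  // End on an open Assistant turn so Claude writes the next reply.
  return prompt + AI_PROMPT;
}
```

The resulting string can be passed as the `prompt` field of `anthropic.completions.create`.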

Finally, here's the data you send with the API.

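```javascript
import Anthropic from "@anthropic-ai/sdk";

// The client reads ANTHROPIC_API_KEY from the environment by default.
const anthropic = new Anthropic();

const completion = await anthropic.completions.create({
  model: 'claude-2',
  max_tokens_to_sample: 300,
  prompt: `${Anthropic.HUMAN_PROMPT} how does a court case get to the Supreme Court?${Anthropic.AI_PROMPT}`,
});

// The generated text is in the `completion` field.
console.log(completion.completion);
```

Cloudflare AI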

Cloudflare has recently launched its own suite of AI services. For LLMs, it uses Llama 2 7B internally (update: Mistral 7B has been added as well). With a limited context size of 2048 tokens, you'll be relying more on external embeddings stores for real-world use cases.
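With only 2048 tokens of context, even a simple chat can overflow. Short of an embeddings store, a simpler mitigation is to drop the oldest turns before each request; the sketch below swaps in that technique. It estimates tokens with a rough 4-characters-per-token heuristic (an assumption, not Llama 2's actual tokenizer), and `estimateTokens` and `trimToBudget` are hypothetical helpers.

```javascript
// Rough heuristic: about 4 characters per token for English text.
// An approximation only; the model's real tokenizer will differ.
function estimateTokens(text) {
  return Math.ceil(text.length / 4);
}

// Keeps all system messages and drops the oldest other messages until the
// estimated size fits within `budget` tokens. Always keeps the latest message.
function trimToBudget(messages, budget) {
  const system = messages.filter((m) => m.role === "system");
  const rest = messages.filter((m) => m.role !== "system");
  const size = (msgs) => msgs.reduce((sum, m) => sum + estimateTokens(m.content), 0);
  while (rest.length > 1 && size([...system, ...rest]) > budget) {
    rest.shift(); // the oldest turn goes first
  }
  return [...system, ...rest];
}
```

For example, `trimToBudget(messages, 1536)` would leave roughly 512 tokens of a 2048-token window for the model's reply; that split is an arbitrary choice to tune for your app.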

The prompt structure matches OpenAI's, with system, user and assistant roles.

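```javascript
// Runs inside a Worker; `ai` wraps the Workers AI binding,
// e.g. `const ai = new Ai(env.AI)` using the @cloudflare/ai package.
const messages = [
  { role: 'system', content: 'You are a friendly assistant' },
  { role: 'user', content: 'What is the origin of the phrase Hello, World' },
];

const response = await ai.run('@cf/meta/llama-2-7b-chat-int8', { messages });
```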
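To expose this over HTTP, you could wrap the call in a Worker fetch handler. This sketch assumes a Workers AI binding named `AI` is configured for the Worker and called directly as `env.AI.run(...)`, and that text-generation models return an object with a `response` string; the `question` request field is made up for illustration.

```javascript
// Minimal Workers-style handler. Assumes a Workers AI binding named `AI`.
const worker = {
  async fetch(request, env) {
    if (request.method !== "POST") {
      return new Response('Send a POST with JSON like {"question": "..."}', { status: 405 });
    }
    const { question } = await request.json();
    const messages = [
      { role: "system", content: "You are a friendly assistant" },
      { role: "user", content: question },
    ];
    const result = await env.AI.run("@cf/meta/llama-2-7b-chat-int8", { messages });
    // Text-generation models return the generated text in `response`.
    return new Response(JSON.stringify({ answer: result.response }), {
      headers: { "content-type": "application/json" },
    });
  },
};
// In a real Worker module you would write: export default worker;
```

Passing `env` in explicitly, as Workers do, also makes it easy to test the handler with a fake `AI` binding.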

It's also free during the introductory period. Check it out!

Conclusion

Building AI applications doesn't have to be scary, and getting started is easier than it looks. Hopefully, this article gives you the right footing to go out there and build your own thing!

By the way, I've been experimenting with my own tool, called how. It's an AI-powered CLI tool that walks you through complex terminal interactions, holding your hand through every step. Check out the demo below.