Scrape and combine multiple web-pages

How to combine multiple posts to generate a summarized output.

Hey folks, you might have read my previous article on Data Engineering, where I solved the problem of not having a good website, app or service to quickly edit JSON data. In this article, I'm going to show you how I generated summaries of web-pages and blogposts to be read at ease on my Kindle. Let's roll!

The What: Scraper-pro-max is a small script that lets you scrape multiple web-pages in sequence and dump their main content into a single HTML file. With that file you can quickly skim through the articles on a blog, keep an offline copy of it, or send the content to any e-reader. The script is plain Node.js, so you can do whatever you want with the generated output as well.

The Need: The need for this script comes from the way I read articles.

- I pick up the articles sent to me from my Telegram bot, Twitter or an RSS feed, and open them on Firefox Mobile.

- If they're short and relevant, I read them then and there. Otherwise, I let them sit in the backlog and archive themselves. If I come back and still want to read them, I send them to my Kindle via a service like Reabble.

However, there was a problem whenever I found a new blog or website I liked. For example, overreacted is an excellent series of posts on React by Dan Abramov, and untools is an excellent series of posts for better thinking.

Instead of letting these posts sit in my backlog, I needed an easy way of pushing these to my Kindle or quickly skimming through them.

The Solution: After facing this problem multiple times, with multiple blogs, I had had enough. Through work on my Telegram bot, and experimentation on extracting the core of an article, along with its reading time, I had gained a little experience in using Mozilla's Readability library. This library allows you to parse any HTML file, and extract its core content in either HTML or text format.

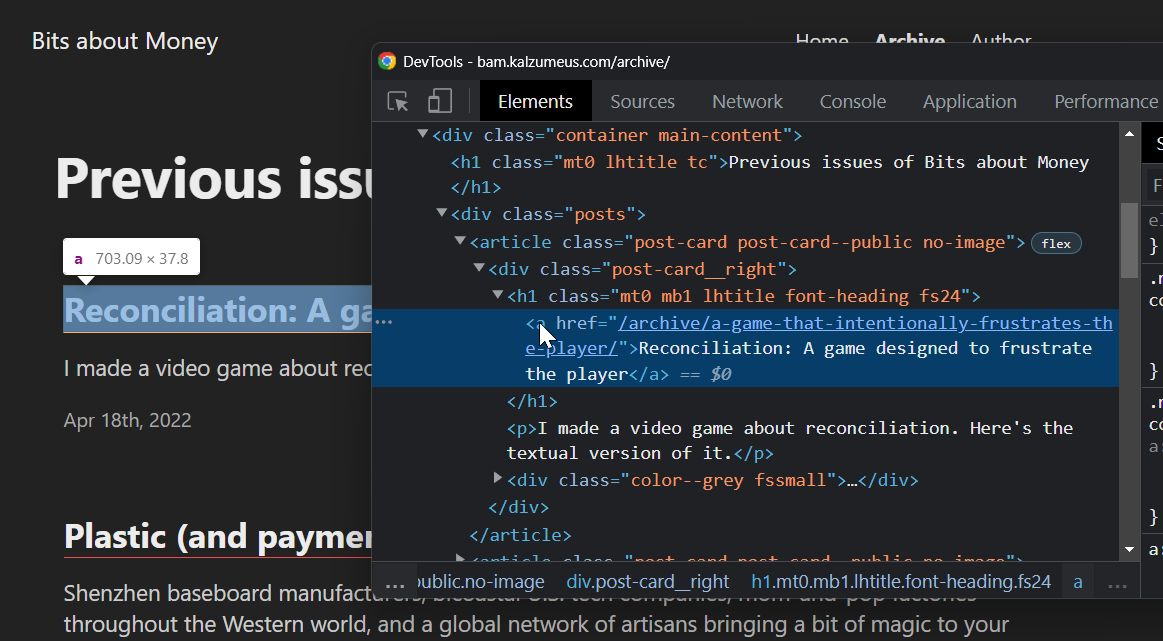

The input was also straightforward, as Node.js scripts can require JSON files directly. Hence, I decided to take user input from a `links.json` file. Getting links for this file can be as simple as running a `document.querySelectorAll` query on a webpage.
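As a rough sketch of that step, the helper below collects the unique link targets matching a CSS selector. The function name `collectLinks` and the default selector are my own illustrative choices, not part of the script, and the assumption that `links.json` holds a plain array of URL strings is also mine.

```javascript
// Hypothetical helper: collect unique URLs from anchors matching a CSS selector.
// `doc` is anything exposing querySelectorAll, e.g. the browser's `document`.
function collectLinks(doc, selector = "a[href]") {
  // In a browser, `a.href` is the resolved absolute URL of the anchor.
  const hrefs = Array.from(doc.querySelectorAll(selector), (a) => a.href);
  // A Set drops duplicates while keeping first-seen order.
  return [...new Set(hrefs)];
}
```

In the browser console on a blog's index page, something like `JSON.stringify(collectLinks(document, "article a"), null, 2)` should print an array you can paste into links.json. The `"article a"` selector is only an example; each site needs its own.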

After you put the links into `links.json`, here's what the rest of the script does:

- Iterate over each link in the data file.

- Fetch the raw HTML for the link.

- Generate a server-side DOM for the HTML via `JSDOM`, and attach `Readability` to it.

- If Readability is able to parse the blog, it's appended synchronously to the `output.html` file.
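The steps above can be sketched roughly as follows. This is a minimal sketch, not the actual script: it assumes Node 18+ (for the global `fetch`), the `jsdom` and `@mozilla/readability` packages, and a `links.json` that is a plain array of URL strings. The function names, the `<section>` layout and the `deps` parameter are hypothetical and exist only to keep the example self-contained.

```javascript
const fs = require("fs");

// Fetch the raw HTML for one link (assumes Node 18+ for global fetch).
async function fetchHtml(url) {
  const response = await fetch(url);
  if (!response.ok) throw new Error(`HTTP ${response.status} for ${url}`);
  return response.text();
}

// Build a server-side DOM and let Readability extract the main content.
// Returns null when Readability cannot find an article in the page.
function extractArticle(html, url) {
  // Required lazily so the other helpers load without these packages.
  const { JSDOM } = require("jsdom");
  const { Readability } = require("@mozilla/readability");
  const dom = new JSDOM(html, { url }); // url lets relative links resolve
  return new Readability(dom.window.document).parse();
}

// Escape a plain-text value before placing it inside HTML.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

// Wrap one parsed article in a section (hypothetical layout).
function renderSection(article, url) {
  return (
    `<section>\n<h1>${escapeHtml(article.title)}</h1>\n` +
    `<p>Source: ${escapeHtml(url)}</p>\n${article.content}\n</section>\n`
  );
}

// Process links in sequence, appending each parsed article to outputPath.
// Returns the URLs that Readability could not parse.
async function combinePages(links, outputPath = "output.html", deps = {}) {
  const fetchPage = deps.fetchHtml || fetchHtml;
  const extract = deps.extractArticle || extractArticle;
  const skipped = [];
  for (const url of links) {
    const html = await fetchPage(url);
    const article = extract(html, url);
    if (article) {
      fs.appendFileSync(outputPath, renderSection(article, url));
    } else {
      skipped.push(url);
    }
  }
  return skipped;
}
```

To run it against your own list, you could call `combinePages(require("./links.json"))` and log the returned array to see which pages were skipped. Because each article is appended as soon as it is parsed, a failure halfway through still leaves the earlier articles in the output file.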

If the script runs without any errors, you get an HTML file with all the data at the end. You can use this data to skim the blog, store it in an archive, or send it to an e-reader :)

Feel free to expand upon this project on GitHub, and reach out to me with your thoughts on Twitter.